AI Trends in 2026: A Visionary Yet Grounded Forecast

Published: 06.08.2025

Estimated reading time: 30 minutes

The field of artificial intelligence is advancing at an astonishing pace – faster than many can keep up with. By 2025, generative AI and large language models (LLMs) had gone mainstream, and 2026 promises even more transformative shifts. From breakthrough technologies like multimodal AI assistants to evolving regulations and societal changes, the AI landscape is poised for another leap. This article explores major AI trends expected in 2026, blending visionary developments with grounded insights. Whether you’re a creator, developer, startup founder, or enterprise leader, understanding these trends will help you prepare for the AI-driven future.

Technology Trends in 2026

Evolution of LLMs: GPT-5.5, Claude 4, and Beyond

Bigger, smarter models: The next generation of LLMs is on the horizon. By 2026 we expect new versions (hypothetically GPT-5.5 from OpenAI, Claude 4 from Anthropic, etc.) that dramatically improve upon today’s capabilities. These models will likely feature expanded context windows, greater multimodal understanding, and more efficient reasoning. For instance, Anthropic’s Claude 2 could already handle 100,000-token contexts (around 75,000 words) when it launched in 2023, letting it digest books or hours of conversation in one go. Future GPT-5+ models may push context limits even further, enabling long-term memory and more coherent dialogues.
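The 100,000-token figure lines up with roughly 75,000 words because English text averages about 0.75 words per token. A minimal sketch of that back-of-the-envelope check follows; the 0.75 ratio is only a rule of thumb (real tokenizers vary by model and language), and the function names and the idea of reserving tokens for the model’s reply are illustrative assumptions.

```python
# Rule of thumb for English text (an assumption; actual ratios depend on the tokenizer).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many tokens a text of `word_count` words will use."""
    return round(word_count / words_per_token)


def fits_in_context(word_count: int, context_tokens: int, reserve_for_output: int = 0) -> bool:
    """Check whether a document fits in the context window, leaving room for the reply."""
    return estimate_tokens(word_count) + reserve_for_output <= context_tokens


print(estimate_tokens(75_000))                  # 100000
print(fits_in_context(75_000, 100_000))         # True
print(fits_in_context(75_000, 100_000, 4_000))  # False: no room left for an answer
```

In other words, a 75,000-word book fills a 100,000-token window almost exactly, which is why longer windows matter for whole-document tasks.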

Multimodal intelligence: LLM evolution isn’t just about size; it’s about modality. GPT-4 introduced image understanding, and by 2026 it’s expected that flagship models will be fully multimodal – fluent in text, vision, audio, maybe even video. Google’s Gemini model is explicitly built to be natively multimodal, handling text, images, audio, code, and more. Tech industry observers note that models like GPT-4 Turbo and Google’s Gemini are “pushing boundaries” in 2026, allowing applications that see, hear, and respond like humans. In practice, this means an AI could analyze a photo, answer a spoken question about it, and generate a spoken response or even a brief video – all within one unified system. These richer capabilities pave the way for far more natural and powerful AI interactions.

Reasoning and specialization: We also anticipate improvements in the reasoning and reliability of LLMs. New training techniques and perhaps hybrid neuro-symbolic approaches could make GPT-5.5 or Claude 4 better at logic, math, and following complex instructions. At the same time, there’s a trend toward specialized LLMs – models fine-tuned for code, design, medicine, etc. By 2026 many industries will deploy domain-specific AI models that outperform general ones on niche tasks, while general LLMs become more of an all-purpose “brain” integrated into various tools.

Multimodal Agents and Agentic Workflows

One of the most game-changing trends is the rise of agentic AI – autonomous AI agents that can plan and act to accomplish goals with minimal hand-holding. Unlike traditional AI tools that wait for explicit prompts, agentic AI systems proactively make decisions and take multi-step actions. In 2026 this concept moves from experimentation into real-world adoption. “Agentic AI refers to autonomous, intelligent systems that adapt to changing environments, make complex decisions, and collaborate with other agents and humans,” as Deloitte explains. Early implementations in 2023, like AutoGPT, hinted at what’s possible; by 2026, advances in LLM reasoning and longer memory enable agents that truly execute workflows.

Expect to see multimodal AI agents that combine text, vision, and voice capabilities. These agents can use software tools, interact with web content, or control IoT devices as part of completing tasks. For example, a customer service bot might autonomously handle a support ticket: reading a user’s email, checking a knowledge base, pulling up account data, composing a response, and even triggering a follow-up call – all without a human in the loop. Businesses are starting to move from simple AI tools to autonomous AI workflows, deploying agents in customer support, supply chain management, finance (for compliance or fraud monitoring), and more.
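At its core, the support-ticket example is a loop: decide on the next step, call a tool, fold the result into the agent’s working state, and repeat until nothing is left to do. The sketch below assumes a fixed step order standing in for an LLM planner, plus hypothetical tool functions (`read_email`, `search_kb`, `get_account`, `draft_reply`); it illustrates the orchestration pattern, not any particular vendor’s agent framework.

```python
from typing import Callable, Optional


# Hypothetical tools; real versions would call email, knowledge-base, and CRM systems.
def read_email(state: dict) -> dict:
    return {"question": state["ticket"]["body"]}


def search_kb(state: dict) -> dict:
    kb = {"reset password": "Use Settings > Security > Reset password."}
    question = state["question"].lower()
    hits = [answer for topic, answer in kb.items() if topic in question]
    return {"kb_answer": hits[0] if hits else None}


def get_account(state: dict) -> dict:
    return {"account": {"id": state["ticket"]["customer_id"], "plan": "pro"}}


def draft_reply(state: dict) -> dict:
    answer = state["kb_answer"] or "A specialist will follow up shortly."
    return {"reply": f"Hi! ({state['account']['plan']} plan) {answer}"}


TOOLS: dict[str, Callable[[dict], dict]] = {
    "read_email": read_email,
    "search_kb": search_kb,
    "get_account": get_account,
    "draft_reply": draft_reply,
}


def plan_next_step(state: dict) -> Optional[str]:
    """Stand-in for an LLM planner: return the next unfinished step, or None when done."""
    for step in ("read_email", "search_kb", "get_account", "draft_reply"):
        if step not in state["done"]:
            return step
    return None


def run_agent(ticket: dict, max_steps: int = 10) -> dict:
    """Plan, act, and record results until the planner reports nothing left to do."""
    state = {"ticket": ticket, "done": []}
    for _ in range(max_steps):  # hard cap so a confused planner cannot loop forever
        step = plan_next_step(state)
        if step is None:
            break
        state.update(TOOLS[step](state))
        state["done"].append(step)
    return state


result = run_agent({"customer_id": 42, "body": "How do I reset password?"})
print(result["reply"])  # Hi! (pro plan) Use Settings > Security > Reset password.
```

In a real agent, `plan_next_step` is where the model would choose among tools based on the state so far; the step cap and the explicit tool table are what keep that choice bounded.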

Crucially, these AI agents will operate under new governance structures. As they become more capable, organizations must implement strict guardrails and oversight. It’s predicted that by 2026 companies will have “well-defined governance frameworks and usage guidelines” specifically for autonomous agents. New roles like “AI ops” or “agent wranglers” may emerge – teams responsible for monitoring and training AI agents. This trend goes hand-in-hand with improved AI observability (keeping logs of AI decisions, monitoring behavior) so that humans maintain insight and control over what these agents do.
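In practice, guardrails and observability often start as something quite plain: a list of actions an agent may take on its own, a list that requires human sign-off, a default of denying everything else, and a structured log line for every decision. The sketch below assumes hypothetical action names and a hypothetical `approve` callback standing in for a human reviewer; it is one possible shape, not a standard.

```python
import json
import time
from typing import Callable

AUTONOMOUS_ACTIONS = {"send_reply", "tag_ticket"}   # agent may act alone
NEEDS_APPROVAL = {"issue_refund", "close_account"}  # a human must sign off


def execute_with_guardrails(action: str, params: dict,
                            approve: Callable[[str, dict], bool],
                            log: list) -> str:
    """Decide whether an agent-proposed action may run, and log the decision."""
    if action in AUTONOMOUS_ACTIONS:
        outcome = "executed"
    elif action in NEEDS_APPROVAL:
        outcome = "executed" if approve(action, params) else "rejected_by_human"
    else:
        outcome = "blocked"  # anything not explicitly listed is denied by default
    log.append(json.dumps({"ts": time.time(), "action": action,
                           "params": params, "outcome": outcome}))
    return outcome


def always_deny(action: str, params: dict) -> bool:
    """Stand-in for a human reviewer who rejects every request."""
    return False


audit_log: list = []
print(execute_with_guardrails("send_reply", {"ticket": 1}, always_deny, audit_log))     # executed
print(execute_with_guardrails("issue_refund", {"amount": 50}, always_deny, audit_log))  # rejected_by_human
print(execute_with_guardrails("delete_database", {}, always_deny, audit_log))           # blocked
```

Denying unlisted actions by default means a newly added tool cannot run unsupervised until someone decides which list it belongs on.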

Generative Video and Audio Breakthroughs

The generative AI boom began with text and image generation. Now, generative video and audio are approaching a similar inflection point. Between 2023 and 2025, we saw the early wave of text-to-video tools (e.g. Runway’s Gen-2, Meta’s Make-A-Video) and AI music generators. By 2026, expect state-of-the-art generative models that can produce short videos, music clips, and interactive media with remarkable quality from simple prompts. In fact, experts predict that “2026 is when actual multimodal experiences become mainstream”, as the foundations laid by 2023–2025 tools mature.

Generative video models are improving in resolution, coherence, and length of output. It’s plausible that 2026 will bring AI-generated videos that are indistinguishable from amateur human-made footage for certain use cases (like training videos, product explainers, or short advertisements). This opens up new creative and marketing possibilities – and challenges in verifying what’s real. Similarly, AI-generated audio (from synthetic voices to music composition) will reach new heights. We already have AI voices that mimic humans with eerie accuracy; going forward, AI music engines might let creators generate custom soundtracks on the fly, or even have virtual AI actors reading scripts with emotion and nuance.

These advances will likely be integrated into creative software and platforms. For example, a video editing suite in 2026 might allow a user to “generate B-roll of a sunset over a city skyline” or “auto-generate a backing track in the style of 90s pop” with one click. For content creators and media companies, generative video/audio can dramatically speed up production – though it also raises copyright and authenticity concerns (addressed later in this article). Overall, 2026 will see generative AI expanding beyond text and images into truly multimodal content creation. The combination of text, visuals, and sound generation means AI can produce entire multimedia experiences from a simple idea.

Personal AI Assistants and AI-Native UIs

The dream of a personal AI assistant – an ever-present AI helper that knows you and handles tasks – will edge closer to reality in 2026. Tech giants are racing to embed AI deeply into operating systems and everyday apps. We’re already seeing early steps: e.g. Microsoft’s Copilot in Windows and Office, or Google integrating AI into search and productivity tools. By 2026, these will evolve into more capable, personalized assistants. Google’s roadmap, for instance, envisions “a universal assistant” powered by advanced agentic models like Gemini that can take action on your behalf with supervision. In practice, your future AI assistant might schedule meetings, triage your email, do shopping comparisons, draft documents, or even control smart home functions – all through natural conversation.

AI-native user interfaces (UIs) are an emerging concept here. Instead of clicking through menus or typing queries, users will interact with software by simply asking or instructing in human language. An “AI-native UI” means the interface is built around AI’s capabilities – for example, a project management app where you just tell an AI “organize tasks for this week and notify the team,” rather than manually configuring settings. This shift from traditional UI to conversational or proactive UI could be as transformative as the move to touchscreens was. Companies are already exploring UIs driven by prompts and chat, which dynamically handle user needs in ways static menus cannot.
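Under the hood, one common pattern for AI-native UIs is to have the model translate a sentence into a structured request (for example, JSON naming a function and its arguments) that the app validates and then hands to ordinary code. In the sketch below the model’s output is hard-coded, and the JSON shape and the handlers `organize_tasks` and `notify_team` are hypothetical.

```python
import json


# Hypothetical app functions the AI layer is allowed to call.
def organize_tasks(week: str) -> str:
    return f"Tasks grouped by priority for week {week}"


def notify_team(message: str) -> str:
    return f"Team notified: {message}"


HANDLERS = {"organize_tasks": organize_tasks, "notify_team": notify_team}


def dispatch(model_output: str) -> list[str]:
    """Run each action the model requested, rejecting anything the app doesn't expose."""
    results = []
    for call in json.loads(model_output)["actions"]:
        handler = HANDLERS.get(call["name"])
        if handler is None:
            raise ValueError(f"Model requested unknown action: {call['name']}")
        results.append(handler(**call["arguments"]))
    return results


# What a model might return for "organize tasks for this week and notify the team".
example_output = json.dumps({"actions": [
    {"name": "organize_tasks", "arguments": {"week": "2026-W03"}},
    {"name": "notify_team", "arguments": {"message": "This week's plan is ready"}},
]})
print(dispatch(example_output))
```

Keeping an explicit handler table means the model can only trigger actions the app already exposes; an unrecognized name raises an error instead of running.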

By 2026, expect many applications and devices to have an AI agent “under the hood.” Voice assistants will grow up from simple Q&A bots to true concierge services. Imagine an AI in your phone or AR glasses that observes your context and offers help unasked – like suggesting, “You seem to be driving to a new place, shall I pull up directions and the weather there?” These AI companions will leverage multimodal perception (camera, mic, location data) to be more context-aware.

For creators and developers, AI-native UIs mean rethinking product design. It’s a move from user-initiated interactions to AI-initiated support and from fixed design elements to fluid, generated responses. Early adopters of this trend report that AI-driven interfaces can wow users with personalized, interactive experiences beyond what static UIs offer. However, designing good AI interactions (prompt design, response tuning) becomes as important as graphic design in the AI-native era.

Policy, Governance, and Societal Shifts

Global AI Regulation Gathers Momentum

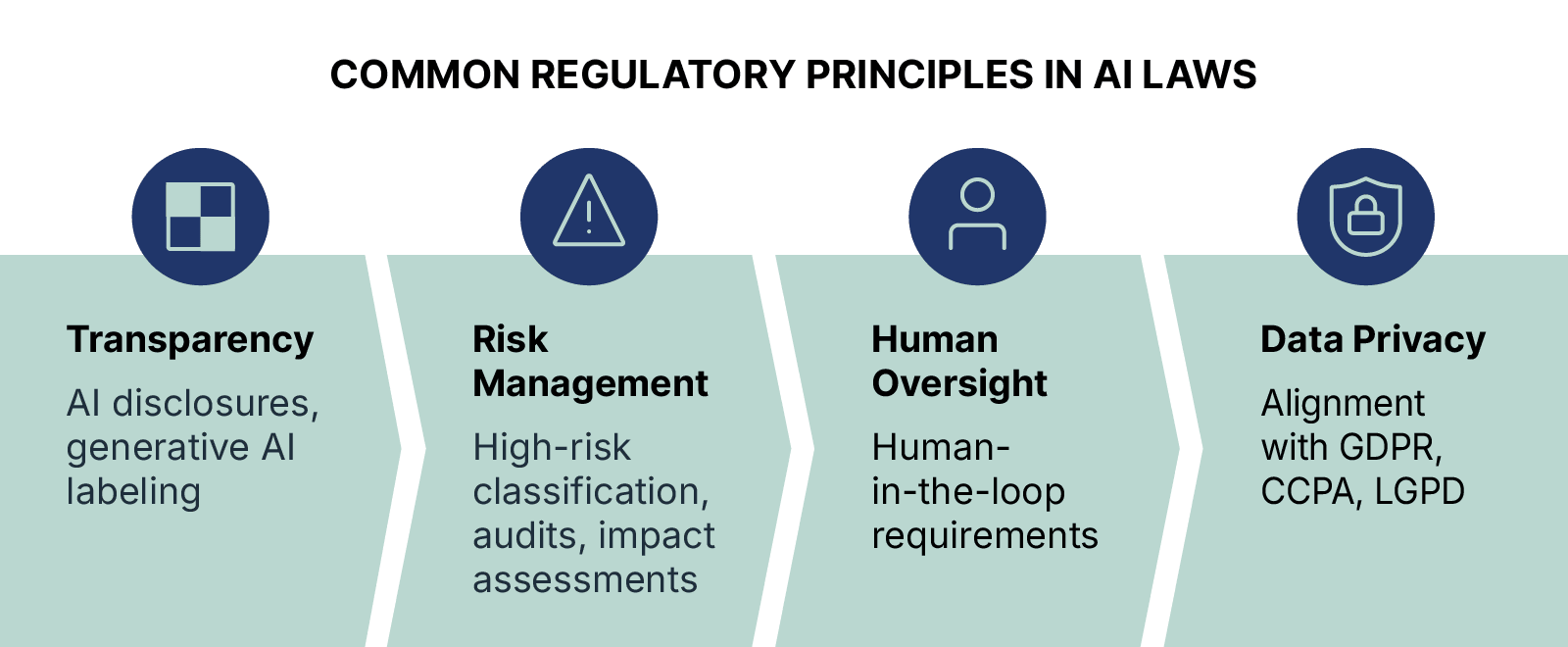

The policy landscape around AI is finally catching up. In 2026, we’ll see significantly more regulatory oversight on AI in major jurisdictions. Europe is leading the way with the EU AI Act – the world’s first comprehensive AI law – which begins enforcement in phases from 2025 into 2026. This landmark legislation imposes strict rules on “high-risk” AI systems (like those used in healthcare, hiring, or transportation) and even addresses general-purpose models. For example, from February 2025 the EU bans AI systems posing unacceptable risks (e.g. social scoring); from August 2025 providers of general-purpose AI models face transparency and documentation obligations; and from August 2026 most rules for high-risk AI systems take effect, along with transparency requirements for AI-generated content. Providers of large models will need to “establish policies to respect EU copyright laws” and publish summaries of their training data, per the AI Act’s requirements – a response to concerns about AI training on copyrighted works.

North America is also moving, albeit more cautiously. The United States doesn’t yet have a single AI law, but there’s growing pressure for AI governance. The US government has issued AI risk management frameworks and secured voluntary safety commitments from AI companies. Several states have begun enacting laws around AI in specific domains (such as Illinois’s Artificial Intelligence Video Interview Act, which covers AI analysis of recorded job interviews). By 2026, we might see the introduction of federal AI legislation or at least sector-specific regulations (for privacy, autonomous vehicles, etc.). Globally, “governments will continue to introduce new regulations governing data privacy, security, and AI governance,” with varied approaches. Deloitte analysts note that regulatory scrutiny is increasing worldwide – the EU has clear rules, while the outlook in the US is still uncertain as early-stage bills develop. In Asia, China implemented its Generative AI Measures in 2023, requiring security reviews and content controls for AI services, and other countries like Japan, India, and Singapore are formulating their own AI guidelines.

For companies and AI developers, the regulatory momentum means compliance can’t be an afterthought. Organizations are advised to “proactively implement robust compliance programs — encompassing transparency, explainability, and continuous monitoring — not only to avoid legal penalties… but also to preempt regulation.” In practice, this involves steps like model documentation, bias audits, data governance checks, and AI output labeling. By 2026, having an AI governance team or at least clear AI policies will be common in mid-to-large enterprises. Regulations may also spur innovation in AI safety tech – tools for dataset inspection, bias mitigation, and usage tracking – as businesses seek to meet new standards.
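A bias audit often begins with a simple comparison of outcomes across groups. One widely used heuristic, the “four-fifths rule” from US employment-selection guidance, flags a concern when a group’s selection rate falls below 80% of the highest group’s rate. The sketch below applies that heuristic to made-up hiring-screen data; the group labels and numbers are invented, and a real audit would add more metrics, statistical testing, and legal review.

```python
from collections import defaultdict


def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of applicants in each group that the model selected."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def four_fifths_check(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, bool]:
    """True means the group passes: its rate is at least `threshold` x the best group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return {g: r >= threshold * best for g, r in rates.items()}


# Illustrative data only: group A selected 50 of 100, group B selected 30 of 100.
sample = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
print(selection_rates(sample))    # {'A': 0.5, 'B': 0.3}
print(four_fifths_check(sample))  # {'A': True, 'B': False}
```

Here group B’s rate (0.3) is below 0.8 × 0.5 = 0.4, so the check flags it for closer review rather than proving discrimination on its own.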

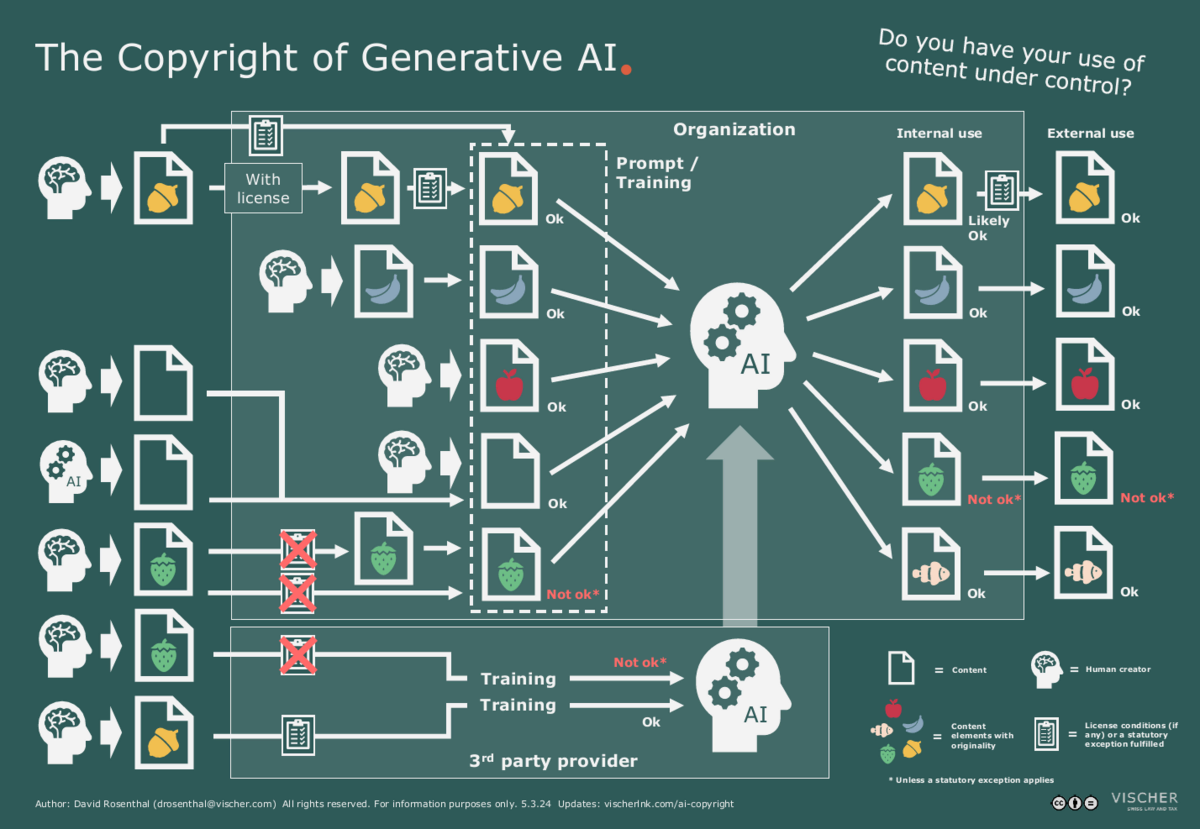

Copyright and AI Liability Under Scrutiny

The legal system in 2026 will be grappling with fundamental questions: Who owns AI-generated content? And who is liable when AI goes wrong? Copyright law is in the midst of reform to address AI. Creators and content owners have raised alarms about AI models training on their work without compensation, and about AI outputs that mimic copyrighted style. In response, courts and lawmakers are testing new ground. We’ve seen lawsuits by artists and authors against AI firms for scraping content, and by 2026 some precedent may emerge on whether training data use falls under “fair use” or requires licensing. The EU AI Act’s provision on respecting copyright (mentioned above) hints that AI developers may need to obtain rights or use public domain data for training in the future. Additionally, there are discussions about giving legal protection (copyright) to AI-generated works – most jurisdictions don’t recognize AI as an author, but if AI creations become ubiquitous, laws could change to grant at least partial rights to the humans who prompt or publish AI content.

AI liability is another hot issue. Today, if an autonomous vehicle causes an accident or an AI medical tool gives a faulty recommendation, it’s unclear who bears responsibility: the manufacturer, the software developer, the user, or even, in a legal sense, the AI itself. The EU had proposed an AI Liability Directive to make it easier for victims to claim damages from AI-related harms, but in early 2025 the European Commission moved to withdraw that proposal after negotiations failed to reach agreement. This underscores how challenging it is to set liability rules for AI. In the absence of new laws, expect regulators to extend existing product liability and consumer protection frameworks to AI systems. For example, manufacturers might be held liable for defects in an AI system much as they are for a defective appliance.

By 2026 we anticipate more clarity in some areas: for critical applications like self-driving cars or AI in healthcare, governments might impose mandatory insurance or no-fault compensation schemes to ensure victims are covered regardless of proving negligence. There’s also likely to be a push for transparent logging in AI (so that when something goes wrong, it’s easier to audit what the AI did). Overall, companies deploying AI at scale are encouraged to build in “trust layers” – e.g. fail-safes, human override mechanisms, audit trails – to minimize harm and demonstrate diligence. The importance of explainability, observability, and trust in AI is not just an ethical concern but a pragmatic one: it mitigates legal risk and builds public confidence. Industry voices advise focusing on ethical and explainable AI to build trust with customers and stakeholders in this uncertain legal environment.
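Audit trails are most useful when they are tamper-evident. A minimal sketch, assuming a simple in-memory log with hypothetical record fields: each entry stores the SHA-256 hash of the previous entry, so quietly editing any past record breaks the chain and shows up on verification.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> None:
    """Append a decision record linked to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash, "hash": _digest(record, prev_hash)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; an edited or reordered entry makes this return False."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"model": "triage-v2", "input_id": 17, "decision": "escalate"})
append_entry(audit_log, {"model": "triage-v2", "input_id": 18, "decision": "auto_reply"})
print(verify(audit_log))  # True
audit_log[0]["record"]["decision"] = "auto_reply"  # simulate after-the-fact tampering
print(verify(audit_log))  # False
```

On its own, a hash chain only shows that records changed; someone able to rewrite the entire log could recompute every hash, so the latest hash is typically stored or published somewhere separate.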

Workforce Impact: AI Job Augmentation vs. Displacement

Few debates are as intense as the one about AI’s impact on jobs. Will AI steal jobs or supercharge them? The truth in 2026 is likely “both, and” – but there’s growing evidence that augmentation will be the dominant theme in the near term. Rather than wholesale replacement, AI is being used to assist workers, boosting productivity. In fact, 2023’s generative AI breakthroughs led many companies to deploy AI copilots across roles – from coding assistants for developers to AI note-takers for meetings. By 2026 this trend solidifies into what’s called an AI-augmented workforce. As one industry analysis put it, “In 2026, AI isn’t replacing humans, it’s enhancing human performance”, with employees getting digital co-pilots to handle routine tasks and provide intelligent insights. Mundane workload (data entry, first-draft writing, basic analysis) can be offloaded to AI, freeing humans for higher-level creative, strategic, or interpersonal duties.

Concrete examples abound: Customer support agents use AI to draft responses and summarize cases, marketers use AI to generate campaign content ideas, and analysts rely on AI to sift big data for trends. Productivity studies in 2024–25 have shown significant time savings when humans work with AI assistance. As a result, many employers are reframing roles to leverage AI – rewriting job descriptions to include AI tool usage, and valuing “AI literacy” as a key skill. A recent World Economic Forum report noted 66% of business leaders say they wouldn’t hire someone without AI skills, yet only 25% of companies offer AI training so far. This indicates a skills gap that organizations are racing to close through reskilling programs (more on that below).

That said, displacement is not off the table. Certain tasks and even entire job categories will be phased out or reduced due to AI automation. Administrative roles, basic accounting, transcription, and some customer service jobs face high exposure. The World Economic Forum projects that technology (including AI) will “transform 1.1 billion jobs” over the next decade – meaning many jobs will change in nature, and some will disappear while new ones emerge. The key challenge is managing this transition humanely. By 2026 we expect increased investment in upskilling and transition support. Governments and companies are funding programs to train workers for new roles (for example, training warehouse clerks to oversee AI-driven logistics systems, or upskilling call center employees to become AI supervisors who handle complex cases escalated by bots).

Encouragingly, many leaders emphasize a “people + AI” symbiosis rather than an AI takeover. Vimal Kapur, CEO of Honeywell, described how in industrial settings AI augments human workers instead of replacing them, acting as a “partner and advisor” that can train less experienced employees on the job. This approach – using AI to amplify human expertise – is gaining traction. We see it in “fusion teams” where staff work alongside AI systems, and in job redesigns that pair a human’s judgment with an AI’s efficiency. Policymakers in 2026 may also encourage this balance by incentivizing companies to retain and retrain staff rather than simply automate and cut. In summary, while some job displacement will occur, the near-term narrative is tilting toward augmentation, with humans and AI working together to achieve more. The net effect on employment by 2026 will vary by industry, but those organizations that proactively reskill their workforce and integrate AI thoughtfully are likely to both improve productivity and keep employees relevant.

Education and Reskilling Trends

With AI altering the skills landscape, education and training are undergoing a transformation. Both the content of education and the methods of delivery are adapting for an AI-driven world. By 2026, expect mainstream curricula – from K-12 to universities and corporate training – to include AI components. This means not only computer science or data science students learn about AI; everybody from MBAs to marketing majors might have a course on AI fundamentals or how to work with AI tools. There’s also a surge in online courses, nano-degrees, and certification programs focusing on AI skills (e.g. prompt engineering, AI ethics, machine learning operations).

One big trend is reskilling the current workforce at scale. Many governments see this as critical to remain competitive and to avoid unemployment spikes. For instance, Microsoft reports it has helped train over 14 million people in AI skills through global initiatives, and it launched an AI Skills Navigator to guide workers to appropriate learning resources. Such public-private partnerships will expand by 2026, aiming to reach tens of millions more learners worldwide. We’re also seeing companies incorporate continuous learning as part of their culture – some are even using AI-powered personalized learning to upskill employees, delivering custom training based on an employee’s role and progress.

In schools and universities, AI’s role as a teaching aid is controversial but growing. By 2026, more educators may use AI tutors to supplement lessons, and AI tools to help with grading or creating personalized study plans. This can enhance learning outcomes if done right. On the flip side, educators are also teaching students how to use AI responsibly (for example, using AI for research versus cheating). Academic institutions are updating policies to handle AI-generated work and emphasizing critical thinking and creativity – skills where humans will still have an edge.

Another aspect is lifelong learning becoming the norm. The pace of AI innovation means skills can become obsolete in a few years. Workers are increasingly aware they must periodically reskill throughout their careers. As one expert noted, “this shift demands innovative approaches to reskilling, focusing on helping workers understand both AI’s evolving capabilities and its limitations”. In practice, this might involve mid-career professionals taking sabbaticals or dedicated training stints to learn new AI tools or even switch careers (e.g. a truck driver learning to supervise automated fleets, or a graphic designer learning to work with generative image models).

Key reskilling trends heading into 2026 include: community colleges and vocational programs adding AI modules, industry-led “bootcamps” for in-demand AI skills, and even labor unions negotiating for retraining benefits. AI itself is also being used to identify skill gaps and recommend training within organizations, making the reskilling process more data-driven. The big picture is optimistic – many studies (like PwC’s AI Jobs Barometer) suggest AI will “make people more valuable, not less” if we successfully upgrade human skills alongside AI advancement. Education systems in 2026 are pivoting to meet that challenge, aiming to produce an agile, AI-savvy workforce for the new era.

Predictions for 2026

Market Consolidation vs. Open-Source Boom

The AI industry in 2026 stands at a crossroads: will a few tech giants dominate (market consolidation), or will open-source and decentralized efforts flourish (an open-source boom)? In reality, we’re likely to see both trends in tension. On one hand, large players like OpenAI/Microsoft, Google/DeepMind, Amazon, and Meta have enormous resources to train frontier models – the cost to build the absolute best models is rising into the tens (or hundreds) of millions of dollars, which could create a barrier to entry for smaller firms. This suggests a consolidation where AI power is concentrated in a handful of companies offering AI-as-a-service (APIs, cloud platforms, etc.). We’ve already seen big companies acquiring AI startups at a rapid clip in 2024–25; by 2026, some segments (like autonomous driving AI or enterprise AI platforms) might be dominated by 2–3 major providers.

On the other hand, the open-source AI movement has gained remarkable momentum. Meta’s 2023 release of its Llama 2 LLM (and other open models) ignited a community-driven surge in AI development. As of early 2025, a McKinsey survey found over 50% of organizations were using open-source AI tools or models in some part of their tech stack. These include models like Meta’s Llama family, Google’s open-weight Gemma family, and others from academia and nonprofits. Many of these open models are closing the performance gap with proprietary ones, while offering benefits like transparency and cost savings. In fact, “more than three-quarters of AI practitioners surveyed expect to increase their use of open-source AI in the coming years,” indicating the trend is to adopt a mix of open and closed solutions.

So, what does this mean for 2026? It likely means a hybrid ecosystem. Big Tech will still lead in cutting-edge, large-scale AI offerings – often provided via cloud – but organizations will also leverage open-source models for flexibility and independence. We may see market consolidation in terms of AI infrastructure (e.g. a few cloud platforms hosting most AI workloads), while at the same time an open-source boom in model availability. One possible outcome is that tech giants themselves embrace more open models (as Meta did by open-sourcing Llama) to drive adoption, or they release community editions of their AI. Conversely, some open-source AI projects might get acquired or see corporate backing, blurring the lines.

Importantly, the open vs closed question has a trust and transparency dimension. Governments and companies concerned about dependency on foreign AI or about the opaqueness of closed models might prefer open ones for critical uses – this ties into the sovereign AI trend, where regions build their own AI capabilities locally. By 2026, we predict high-value niche AI (say, a medical diagnosis model or a national language model) might be developed openly by coalitions of public and private stakeholders, providing an alternative to relying solely on Big Tech.

In summary, expect continued open-source innovation (with faster model iterations shared by the community) alongside strategic consolidation (big companies integrating vertically and offering full-stack AI solutions). For businesses and developers, the strategic bet is to stay flexible: leverage the stability and scale of big providers where needed, but keep an eye on open-source advancements that could offer cost-effective alternatives or enable on-premises deployment with fewer restrictions.
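In code, staying flexible usually means hiding the model behind a small interface so that a hosted API and a self-hosted open model are interchangeable. The sketch below uses a `typing.Protocol`; both backends are hypothetical stubs rather than real client libraries, and the routing rule (sensitive data stays on-premises) is just one example policy.

```python
from typing import Protocol


class TextModel(Protocol):
    """Anything with a name and a generate() method can serve as a backend."""
    name: str

    def generate(self, prompt: str) -> str: ...


class HostedAPIModel:
    """Stub for a large provider's cloud API (a real one would make a network call)."""
    name = "hosted-frontier"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


class LocalOpenModel:
    """Stub for an open-weight model served on your own hardware."""
    name = "local-open"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] answer to: {prompt}"


def pick_backend(contains_sensitive_data: bool, hosted: TextModel, local: TextModel) -> TextModel:
    # Example policy: keep sensitive data on-premises, send everything else to the hosted model.
    return local if contains_sensitive_data else hosted


hosted, local = HostedAPIModel(), LocalOpenModel()
print(pick_backend(True, hosted, local).generate("Summarize this patient note"))
# [local-open] answer to: Summarize this patient note
```

Because callers depend only on `TextModel`, swapping a proprietary service for an open model (or back) touches the routing function, not every feature that uses AI.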

From AI Tools to Autonomous Workflows

Thus far, many organizations have treated AI as a collection of tools – e.g. a chatbot here, a vision API there, a coding assistant for developers. By 2026, there’s a shift towards autonomous workflows powered by AI. Instead of isolated use cases, AI will be orchestrated across processes to handle end-to-end tasks. In other words, AI moves from the toolbox to the engine of operations. This is essentially the practical application of the agentic AI trend discussed earlier.

For instance, consider a sales workflow in 2023: a salesperson might use an AI email writer (tool) to help draft outreach, and perhaps an AI CRM plugin to prioritize leads. In 2026, that could evolve into an autonomous sales agent that continuously analyzes the sales pipeline, sends personalized emails to prospects, schedules follow-up meetings on the rep’s calendar, and reports insights – all automatically. The human in the loop then mainly oversees and handles high-level relationship building, while the AI workflow grinds through the busywork autonomously.

This “autonomy” trend will touch many domains: customer support (AI agents resolve most Tier-1 queries and only complex cases go to humans), IT operations (self-healing systems that detect and fix issues), marketing (AI systems that create, A/B test, and allocate budget for ads with minimal human input), and more. A Deloitte survey anticipates that by 2026, agentic AI will “move beyond pilot projects and become widely adopted across industries,” especially in larger organizations that have the resources to implement it. The proliferation of out-of-the-box AI agent solutions for common business processes will make it easier for companies to plug them in and automate workflows.

To enable this, software vendors are building agent frameworks and integrations. We see early signs with technologies like LangChain (for chaining AI tasks), Microsoft’s “Copilot stack” integrating across Office apps, and new developer APIs for creating multi-step AI flows. By 2026, many enterprise software platforms may come with built-in AI orchestration capabilities. Businesses will demand that their AI can not only provide insights but act on those insights (within set boundaries).

However, fully autonomous workflows also raise the stakes for governance. As mentioned, companies will need robust oversight – including simulation testing of AI workflows before deployment, continuous monitoring, and fallback plans if the AI fails. We predict the emergence of dedicated AI operations teams that manage fleets of AI agents, much as DevOps teams manage software infrastructure. New metrics like AI uptime, AI decision accuracy, and intervention rates will become key performance indicators. Essentially, running autonomous AI processes will be treated with the same rigor as running a critical IT system.
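Metrics like these can be computed from ordinary run records. A minimal sketch follows; the record fields (`succeeded`, `correct`, `human_intervened`) and the exact metric definitions are assumptions for illustration, and “uptime” here is approximated as the share of runs that completed, since organizations will define these KPIs differently.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    succeeded: bool         # the agent finished without crashing or timing out
    correct: bool           # a reviewer or downstream check judged the decision right
    human_intervened: bool  # a person had to step in or override


def workflow_kpis(runs: list[AgentRun]) -> dict[str, float]:
    """Share-based KPIs over a batch of agent runs (definitions are illustrative)."""
    n = len(runs)
    if n == 0:
        return {"uptime": 0.0, "decision_accuracy": 0.0, "intervention_rate": 0.0}
    completed = [r for r in runs if r.succeeded]
    return {
        "uptime": len(completed) / n,
        # Accuracy only counts runs that actually produced a decision.
        "decision_accuracy": sum(r.correct for r in completed) / len(completed) if completed else 0.0,
        "intervention_rate": sum(r.human_intervened for r in runs) / n,
    }


runs = [AgentRun(True, True, False), AgentRun(True, False, True),
        AgentRun(True, True, False), AgentRun(False, False, True)]
print(workflow_kpis(runs))
# {'uptime': 0.75, 'decision_accuracy': 0.6666666666666666, 'intervention_rate': 0.5}
```

Tracked over time, a rising intervention rate is often the earliest sign that an autonomous workflow needs retraining or tighter boundaries.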

In conclusion, 2026 will mark a noticeable pivot from isolated AI-powered tasks to AI-managed processes. It’s a natural evolution as confidence in AI grows. Organizations that succeed here will likely be those that start small (prove out AI on a contained workflow) and then scale up, all while keeping humans in the loop strategically. The payoff is substantial – truly autonomous workflows could dramatically improve efficiency and allow human workers to focus on strategic, creative, or complex problem-solving areas that AI isn’t suited for.

The Rise of AI Trust and Transparency Layers

As AI systems permeate every facet of business and daily life by 2026, the importance of trust becomes paramount. We’ve touched on explainability and governance; here we highlight the broader ecosystem likely to form around AI trust, safety, and observability. In the same way that cybersecurity grew into a massive field alongside the spread of the internet, AI assurance (ensuring AI is reliable, fair, and accountable) will be a booming area in the coming years.

One development is the creation of AI audit and validation services. Just as financial audits are standard, we may see third-party AI audits become routine – especially for high-stakes AI like financial algorithms, medical AIs, or any AI under regulatory oversight. These audits might examine a model’s training data for bias, test its decisions for fairness and consistency, and verify compliance with relevant standards. In fact, the EU AI Act essentially mandates a form of this for high-risk AI: requiring documentation of training data, risk management processes, and even “continuous monitoring” of AI performance in the field. So by 2026, many companies will have internal “model validation” teams or will hire external experts to certify their AI systems.

Another aspect is explainability tools becoming more widespread. Users and regulators will demand to know why an AI made a given recommendation or decision. We predict improved techniques to extract explanations from complex models (like LLMs or deep neural nets). These could be local explainers that highlight which parts of input influenced an output, or higher-level summaries of an AI’s “reasoning”. Especially in domains like finance, healthcare, or legal, providing an explanation will be crucial for trust – for example, an AI diagnostic tool might need to show the medical evidence it based its conclusion on. Organizations will likely favor AI solutions that come with built-in explainability features over “black box” models.
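One of the simplest local explainers is occlusion (leave-one-out): remove each input word in turn and measure how much the model’s score changes. The sketch below applies it to a toy keyword-weighted risk scorer standing in for a real model; the weights are invented, and the attribution methods used on LLMs and deep networks in practice (gradient-based attribution, SHAP, and others) are considerably more involved.

```python
# Toy "model": a keyword-weighted risk score. The weights are invented for illustration.
WEIGHTS = {"overdue": 2.0, "missed": 1.5, "payment": 0.5, "on-time": -2.0}


def risk_score(words: list[str]) -> float:
    return sum(WEIGHTS.get(w, 0.0) for w in words)


def occlusion_attribution(text: str) -> list[tuple[str, float]]:
    """Attribute the score to each word by removing it and measuring the change."""
    words = text.lower().split()
    full = risk_score(words)
    contributions = []
    for i, word in enumerate(words):
        without = words[:i] + words[i + 1:]
        contributions.append((word, full - risk_score(without)))
    # Most influential words first.
    return sorted(contributions, key=lambda pair: abs(pair[1]), reverse=True)


print(occlusion_attribution("Missed payment and account overdue"))
# [('overdue', 2.0), ('missed', 1.5), ('payment', 0.5), ('and', 0.0), ('account', 0.0)]
```

For a linear toy like this, occlusion simply recovers each word’s weight; on non-linear models the same procedure gives an approximate picture, because removing one word can change how the others are interpreted.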

AI observability and logging will similarly see growth. In 2026, it won’t be acceptable to deploy an AI system and not know how it’s operating until something goes wrong. We’ll see advanced monitoring systems that track AI model outputs, detect anomalies (e.g. if the AI suddenly starts giving unusual answers, triggering an alert), and even trace decisions after the fact. For instance, if a content recommendation algorithm surfaces problematic content, the system should log the chain of data and internal states that led to that recommendation – aiding in debugging and accountability.
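A basic version of that alerting can be a rolling statistical check on a per-response metric. The sketch below assumes you already record some numeric signal for each response (here, a hypothetical response length in tokens) and flags values more than three standard deviations from a recent window; production monitoring tools track richer signals, but the underlying idea is similar.

```python
from collections import deque
from statistics import mean, stdev


class OutputMonitor:
    """Flags metric values that deviate sharply from recent history (rolling z-score)."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.history = deque(maxlen=window)  # only the most recent `window` values are kept
        self.threshold = threshold           # alert when |z| exceeds this
        self.min_history = min_history       # no alerts until there is enough baseline data

    def observe(self, value: float) -> bool:
        """Record a value and return True if it should trigger an alert."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu, sigma = mean(self.history), stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly


monitor = OutputMonitor()
typical_lengths = [120, 130, 125, 118, 122, 128, 131, 119, 124, 127, 126]
print([monitor.observe(n) for n in typical_lengths])  # all False: normal variation
print(monitor.observe(900))                           # True: an unusually long answer
```

Appending flagged values to the history keeps the sketch short; a production monitor might exclude them so that a single spike does not widen the baseline.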

There’s also the human side of trust: user education and control. Applications will likely give users more settings to configure AI behavior (tone, aggressiveness, etc.) and easy ways to correct AI mistakes. This “human-in-the-loop” design builds trust because users feel they have oversight. By 2026, UI patterns for AI feedback (like thumbs-up/down, editing AI outputs, asking “why did you do that?”) will be standard in many AI-driven apps.

Finally, ethical AI frameworks will be in full force. Companies will have ethics committees or AI review boards to vet new AI deployments for societal impact. There may be industry-wide certifications or “AI safety” seals to reassure consumers that a product meets certain trust standards. All told, we are entering an era where simply having powerful AI is not enough – it must be trustworthy. Those who provide the “trust layer” – from bias mitigation tools to monitoring dashboards – will be in high demand. And those who implement AI with robust transparency and ethics considerations will have a competitive edge in winning customer confidence (and avoiding legal/regulatory pitfalls).

In summary, 2026’s AI landscape will be defined not just by what AI can do, but how it’s done. The three predictions above highlight that theme: open vs closed development speaks to transparency, autonomous workflows must be paired with oversight, and trust layers are necessary for societal acceptance. Companies and creators should watch these meta-trends as closely as the technology breakthroughs themselves.

What to Prepare For in 2026

To navigate the AI revolution in 2026, consider these key actions and strategies:

- Invest in AI Literacy and Skills: Make sure you and your team understand how to use AI tools. Provide training in AI fundamentals and emerging technologies (LLMs, prompt engineering, etc.) so everyone can work effectively with AI. A more AI-skilled workforce will be both more productive and more resilient to job changes.

- Implement Strong AI Governance: Establish clear policies for AI development and usage in your organization. This includes ethics guidelines, review processes for new AI applications, and compliance checks with emerging regulations. Treat AI with the same rigor as other critical business operations – including audit trails, quality assurance, and contingency plans for failures.

- Experiment (Responsibly) with Autonomous Workflows: Identify pilot projects where AI agents or automation could handle an entire workflow (customer support, marketing campaigns, etc.). Start small to prove value, but design with human oversight and safety in mind from day one. Successful pilots can then scale up, giving you a head start on the agentic AI trend.

- Leverage Open-Source and Proprietary AI Wisely: Stay informed on the latest open-source AI models that could reduce your costs or improve transparency. At the same time, work closely with trusted AI vendors for capabilities that require their scale or expertise. A balanced approach will keep you flexible amid market shifts in AI.

- Focus on Explainability and Trust: Make “ethical and explainable AI” a core principle of your AI strategy. Choose tools that allow you to explain AI decisions to users or regulators. Proactively address bias or safety issues in model outputs. Building trust with customers and stakeholders will pay off as AI becomes more integral to your products or services.
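The advice to design autonomous workflows “with human oversight and safety in mind from day one” can be sketched as an approval gate: an agent proposes actions, low-risk ones run automatically, and high-risk ones wait for a person. The `Action` and `SupervisedWorkflow` names and the two-level risk labels below are hypothetical; the `approve` callback stands in for whatever review step (a UI prompt, a ticket queue) an organization actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    name: str
    risk: str                # "low" or "high"; a hypothetical policy, not a standard
    run: Callable[[], str]   # performs the action and returns a short result


@dataclass
class SupervisedWorkflow:
    """Runs agent-proposed actions, pausing high-risk ones for human approval."""
    approve: Callable[[Action], bool]        # human reviewer's decision
    audit_trail: list = field(default_factory=list)

    def execute(self, actions):
        for action in actions:
            if action.risk == "high" and not self.approve(action):
                self.audit_trail.append((action.name, "rejected"))
                continue
            result = action.run()
            self.audit_trail.append((action.name, f"done: {result}"))
        return self.audit_trail


actions = [
    Action("draft_reply", "low", lambda: "reply drafted"),
    Action("issue_refund", "high", lambda: "refund issued"),
]
# Stand-in reviewer that rejects every high-risk action; a real one would ask a person.
workflow = SupervisedWorkflow(approve=lambda action: False)
print(workflow.execute(actions))
```

Rejected actions are recorded rather than silently dropped, so the same mechanism also produces the kind of audit trail the governance item above calls for.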

By anticipating these trends and preparing accordingly, creators, startups, and enterprises can ride the AI wave of 2026 rather than being swept away by it. The year ahead promises extraordinary opportunities for those who adapt – a chance to reimagine how we work, create, and innovate with AI as a powerful collaborator. The organizations that combine visionary boldness with responsible practices will likely lead the next chapter of the AI revolution.

Sources:

- Deloitte – “Three forces shaping the future of AI (2025-2026)” deloitte.com

- TechKors – “Top 5 AI Trends to Watch in 2026” techkors.com

- Google (DeepMind) – Sundar Pichai & Demis Hassabis announcements on Gemini 2.0 (2024) blog.google

- Anthropic – Claude 2 context window expansion to 100K tokens (2023) anthropic.com

- World Economic Forum – “Future of Jobs 2025” insights on reskilling and AI augmentation weforum.org

- IBM – Explainer on the EU AI Act timeline and requirements ibm.com

- McKinsey – “Open source AI in the enterprise” survey (2025) mckinsey.com